Three US companies are proposing to meet the growing data processing demands of cloud-based computing and online gaming by connecting up thousands of graphics processors in ‘supercomputers’.

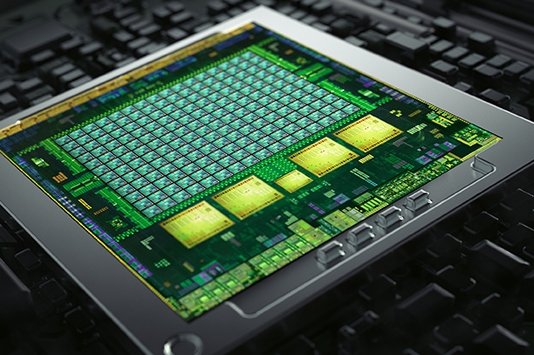

Graphics processor firm Nvidia is working with IDT and Orange Silicon Valley to develop a scalable, low-latency cluster of up to 2,000 Tegra K1 mobile processors.

The processors are connected by RapidIO interconnect devices supplied by IDT, which support 16Gbit/s data interfaces between processor nodes.

There will be 60 processor nodes on a 19-inch 1U board, with more than 2,000 nodes in a rack.

This gives up to 23Tflops of computing power per 1U server, or more than 800Tflops per rack.
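The per-board and per-rack figures can be cross-checked with a few lines of arithmetic. The sketch below is illustrative only: it uses the 384Gflops per-node figure quoted for the Tegra K1 elsewhere in this article, assumes decimal units (1Tflops = 1,000Gflops), and the function names are hypothetical.

```python
import math

# Figures quoted in the article.
NODES_PER_BOARD = 60        # processor nodes on one 19-inch 1U board
GFLOPS_PER_NODE = 384       # Tegra K1 figure quoted in the article
RACK_TARGET_TFLOPS = 800    # "greater than 800Tflops" per rack


def board_tflops(nodes=NODES_PER_BOARD, gflops_per_node=GFLOPS_PER_NODE):
    """Aggregate Tflops of one 1U board (assumes 1 Tflops = 1,000 Gflops)."""
    return nodes * gflops_per_node / 1000


def nodes_for_target(target_tflops=RACK_TARGET_TFLOPS,
                     gflops_per_node=GFLOPS_PER_NODE):
    """Smallest whole number of nodes that reaches the target Tflops."""
    return math.ceil(target_tflops * 1000 / gflops_per_node)


def boards_for_nodes(n_nodes, nodes_per_board=NODES_PER_BOARD):
    """Smallest whole number of 1U boards that holds n_nodes nodes."""
    return math.ceil(n_nodes / nodes_per_board)


if __name__ == "__main__":
    nodes = nodes_for_target()
    print(f"{board_tflops():.2f} Tflops per 1U board")
    print(f"{nodes} nodes on {boards_for_nodes(nodes)} boards for 800 Tflops")
```

Under these assumptions, 60 nodes give roughly 23Tflops per board, and reaching 800Tflops takes a little over 2,000 nodes, which is consistent with the rack figures quoted above.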

That is twice the computing density of Tianhe-2 in China, the largest supercomputer.

According to Sean Fan, vice president and general manager of IDT’s interface and connectivity division: “This demonstrates a breakthrough approach to addressing the tradeoffs between total computing, power and balanced networking interconnect to feed the processors.”

Each node consists of a Tsi721 PCIe-to-RapidIO NIC and a Tegra K1 mobile processor. With 384Gflops of computing for every 16Gbit/s of data rate, a node delivers 24 floating-point operations per bit of I/O.
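The compute-to-I/O ratio is simply the node's floating-point rate divided by its link rate. A minimal sketch, with a hypothetical function name and the article's two figures as inputs:

```python
def flops_per_bit(gflops, gbit_per_s):
    """Floating-point operations available per bit of I/O.

    Both inputs are in giga-units per second, so the prefix cancels.
    """
    return gflops / gbit_per_s


if __name__ == "__main__":
    # 384 Gflops per node over a 16 Gbit/s interface (figures from the article).
    print(flops_per_bit(384, 16))
```

A higher ratio means each node can do more computation for every bit it exchanges with the fabric, so workloads that need less arithmetic per bit transferred are more likely to be limited by the interconnect than by the processor.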

The high-speed interconnect is important because it allows each processor node to communicate with another node with only 400ns of fabric latency. Memory-to-memory latency is less than two microseconds.
