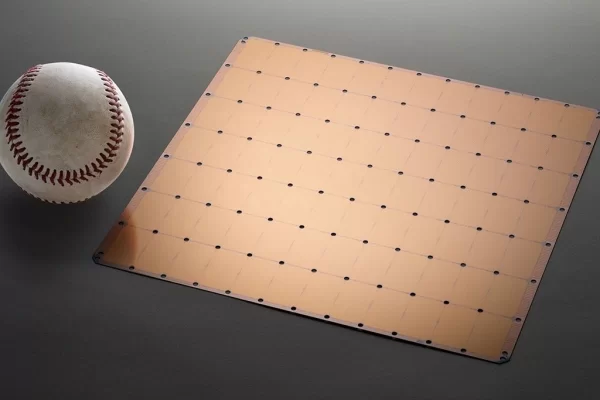

To meet the ever-increasing computational demands of AI, California-based AI startup Cerebras Systems recently unveiled its first product, which it claims is the largest AI chip ever made. Measuring 46,225 mm² (56.7 times larger than the largest graphics processing unit) and packing more than 1.2 trillion transistors, the Wafer-Scale Engine (WSE) is, the company says, the largest semiconductor chip ever built for deep learning applications.
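The size comparison can be checked with a quick calculation. This is only a sketch: it assumes the reference GPU die measures about 815 mm², a figure not given above, and the names `GPU_DIE_AREA_MM2` and `area_ratio` are illustrative.

```python
# WSE area as quoted in the article.
WSE_AREA_MM2 = 46_225
# Assumption (not stated in the article): the largest GPU die is about 815 mm^2.
GPU_DIE_AREA_MM2 = 815


def area_ratio(large_mm2: float, small_mm2: float) -> float:
    """Return how many times larger the first area is than the second."""
    return large_mm2 / small_mm2


ratio = area_ratio(WSE_AREA_MM2, GPU_DIE_AREA_MM2)
# Side length if the chip were a perfect square.
side_mm = WSE_AREA_MM2 ** 0.5

print(f"{ratio:.1f}x the assumed GPU die area")
print(f"about {side_mm:.0f} mm per side if square")
```

Under that assumption, the ratio matches the 56.7 figure quoted for the chip.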

The Wafer-Scale Engine contains about 400,000 high-performance, AI-optimized Sparse Linear Algebra cores. These cores, each fed by local memory, are linked by a fine-grained, all-hardware, high-bandwidth, low-latency mesh communication network with an aggregate interconnect bandwidth of 100 Pb/s. Unlike conventional processors and GPUs, which are cut as many separate dies from a silicon wafer, Cerebras’ WSE is a single chip that spans the wafer, with all of its transistors interconnected on one piece of silicon. The WSE also houses 18 GB of fast on-chip SRAM, distributed among the cores in a single-level memory hierarchy. Compared with the largest GPU, the WSE has 3,000 times more on-chip memory and more than 10,000 times the memory bandwidth.
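For a sense of scale, the on-chip memory can be divided across the cores. This rough sketch reads the memory figure as 18 decimal gigabytes and assumes it is spread evenly over exactly 400,000 cores. Both are simplifications, and `sram_per_core` is an illustrative helper.

```python
# Figures from the text; the memory figure is read as 18 gigabytes (decimal).
ON_CHIP_SRAM_BYTES = 18 * 10**9
# The article says "about 400,000" cores, so the result is approximate.
CORE_COUNT = 400_000


def sram_per_core(total_bytes: int, cores: int) -> float:
    """Average bytes of SRAM per core, assuming an even split."""
    return total_bytes / cores


per_core_kb = sram_per_core(ON_CHIP_SRAM_BYTES, CORE_COUNT) / 1_000
print(f"roughly {per_core_kb:.0f} KB of SRAM per core")
```

Each core therefore works from only tens of kilobytes of local memory. The capacity comes from the number of cores, not from any single large memory pool.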

The company claims that the Wafer-Scale Engine, designed to accelerate AI work, can cut the time it takes to process complex data from months to minutes.

“Every architectural decision was made to optimize performance for AI work. Designed from the ground up for AI work, the Cerebras WSE contains fundamental innovations that advance the state-of-the-art by solving decades-old technical challenges that limited chip size, such as cross-reticle connectivity, yield, power delivery and packaging. The result is that the Cerebras WSE delivers, depending on workload, hundreds or thousands of times the performance of existing solutions at a tiny fraction of the power draw and space,” the company said.
