INTRODUCTION

HIGH-LEVEL OVERVIEW

SOCIAL IMPACT

Our project was intended mainly for entertainment and is not dangerous. We constructed a user-friendly playable instrument that is similar to a bass guitar. As far as we know, this is a novel idea that we originated. We achieved this goal by combining material from previous labs with our own ideas.

In theory, our design is relatively inclusive for users of varying ability. The device can be held like a guitar, but it can also be placed on a table or the floor, so users who can’t hold objects for long periods or in an upright position can still play. Playing our air bass with the flex sensor glove is also easier than playing a real guitar, since the user does not need to press strings with their fingertips to produce a sound. Users still need two functioning hands, one to strum with the flex sensor glove and one to simulate fret positions, but this should in theory be easier than plucking and fretting real strings.

Color and the identification of specific shapes or patterns play little role in our implementation, so users with color blindness or poor eyesight should have an experience similar to users with full sight. For the full experience, some hearing ability is needed. Hearing is not required to interface with the device itself, but it is central to the project’s purpose as a way to practice guitar without owning one: hearing the synthesized notes lets users self-correct the placement of their fingers and the timing of their strumming so they can play the songs they choose.

Our project doesn’t involve any over-the-air communication, so there are no specific IEEE or FCC standards that we must follow. Additionally, because our inputs and outputs pose little to no harm to the user, no major ANSI, ISO, or FDA standards appear to apply. Copyright is not much of a concern for our team, as the user is responsible for any melodies they create and how they choose to share them.

IMPLEMENTATION OVERVIEW

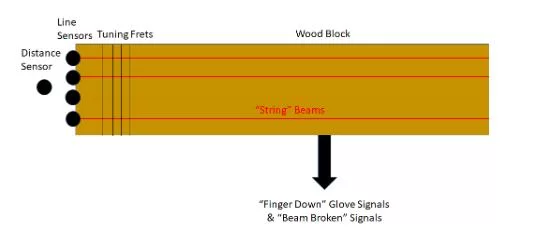

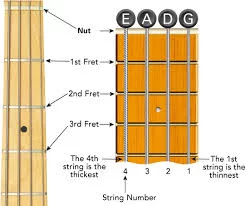

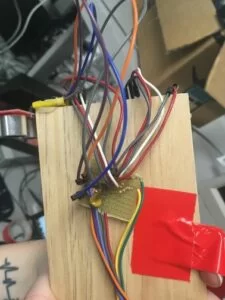

The slab of wood mimics the neck of a bass guitar, as shown in Figure 1. To simulate the four strings, we aligned four beam break sensors parallel to the length of the wood. If a finger is placed on one of the “strings”, the beam break sensor registers it, which narrows down the range of frequencies we might need to output. Nothing is played until a string is strummed by flexing a finger in the glove. Then a frequency is output to the DAC with an envelope that starts and stops depending on the reading from the flex sensor.

Beam break sensors can only detect whether an object is between the pair, not where along the beam the object is. Since the position of your fingers on each string of a guitar determines the note played, we need to determine which “string” a finger is placed on and at which fret.

For the latter, to determine which fret the user is playing, we used an ultrasonic distance sensor. This sensor is placed at the end of the wood slab to measure how far down the neck of the guitar the user’s hand is. The distance is mapped to a fret based on the length of a guitar neck and the typical placement of each fret, and that fret is then used, along with the string, to determine which frequency the user is attempting to play. For simplicity, our implementation assumes that the user plays a single note at a time, so as not to overcomplicate our logic.

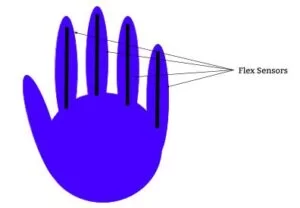

For strumming, we use a black polyester glove with short flex sensors attached to the inside of each finger, as shown in Figure 2. These sensors have a resistance of about 30 kΩ at rest and up to about 100 kΩ when flexed. When the user bends a finger, we combine which finger is bent, which beam has been broken, and how far down the neck the user’s hand is to determine which note is being played. This also serves as a kind of redundancy check, since a fretted note is played only when a string’s beam is broken and a corresponding finger is bent.

As in Lab 1, we use additive synthesis to produce approximately bass-like sounds. An ISR timed to the audio sampling rate writes each sample to the DAC, whose output is sent through an audio jack to the lab speakers to play the notes the user selects.

IN-DEPTH BREAKDOWN

BREAK BEAM SENSORS

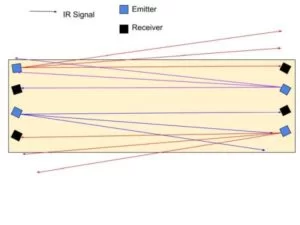

Our AirBass had four pairs of break beam sensors, which act as the four strings of our guitar. Each pair has an emitter and a receiver. The emitter sends an IR signal to the receiver, and the receiver signals the MCU whether or not it is receiving the emitter’s signal. When the emitter and receiver are in line, the receiver sends a low signal to the MCU. If the pair is not in line or something is blocking the beam, the receiver sends a high signal to the MCU. Our circuit is shown in Appendix C. Each receiver output has a 10 kΩ pull-up resistor and passes through a 1 kΩ series resistor that protects the MCU input. Both the emitters and the receivers were powered by 3.3 V from the board.

We quickly realized that if all receivers were on one end of the guitar and all emitters on the other, the pairs would interfere: when we blocked a given pair, its receiver would still report receiving a signal because of IR from the other emitters. Each emitter and receiver has a cone of about 10 degrees. To overcome this, we first alternated the emitters and receivers on each side of the guitar neck, which increased the distance between emitters on the same side so that a receiver would not fall in the cone of an emitter other than its own. This did not work either, and there was still interference. Our final idea was to angle the beam break sensors to take advantage of their cones of transmission and reception. We angled the E and A strings’ sensors and the G and D strings’ sensors about 10 degrees away from each other, so that the edge of each emitter’s cone falls at the edge of its own receiver’s detection cone. Figure 3 shows a diagram of the beam break layout, with arrows indicating each IR beam and its cone, illustrating how the sensors avoid interfering with one another.

We have a thread called protothread_beam that keeps track of which beam break pairs are broken, and thus which strings are being pressed. This thread runs every 5 milliseconds (200 Hz), which is ample, since a human finger cannot move or shift faster than a few dozen times per second. In this thread, we first read port Y of the port expander: we enter an SPI critical section, load the value of port Y into porty_val, and exit the critical section. Next we perform four checks, one per string, each testing whether that string’s beam is broken based on the bits set in porty_val. For the E string, for example, we check whether ((porty_val & BIT_7) != 0x80). Since the E string receiver drives pin RY7, this tests whether bit 7 from the port expander is low. If the bit is low, we set e_string to 0, meaning that the beam is broken; if it is high, we set e_string to -1, indicating that the E string is not pressed. We do this check for each of the four strings and assign e_string, a_string, d_string, and g_string accordingly. Later in our code, we use whether a string is pressed to decide whether to calculate the fret at which the user’s hand is placed. This matters because if no beam is broken, an open string is being played and we do not need to calculate where the hand is.
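The port-Y checks in protothread_beam can be modelled as a pure function, sketched below so it runs off the board. This is an illustration, not the project’s code: only BIT_7 for the E string comes from the text, and the bits used here for the A, D, and G strings (6, 5, 4) are hypothetical. It follows the thread’s convention that a low bit means a broken beam (value 0) and a high bit means an open string (value -1).

```c
#include <stdint.h>

/* Only BIT_7 (E string on RY7) is given in the text; the rest are hypothetical. */
#define BIT_7 0x80u
#define BIT_6 0x40u
#define BIT_5 0x20u
#define BIT_4 0x10u

/* 0 = beam broken (string pressed), -1 = beam intact (open string). */
typedef struct {
    int e_string, a_string, d_string, g_string;
} beam_state_t;

/* A low bit on the receiver line is treated as a broken beam. */
static int beam_broken(uint8_t porty_val, uint8_t mask) {
    return ((porty_val & mask) != mask) ? 0 : -1;
}

/* Decode one read of port Y into the four string variables. */
beam_state_t decode_beams(uint8_t porty_val) {
    beam_state_t s;
    s.e_string = beam_broken(porty_val, BIT_7);
    s.a_string = beam_broken(porty_val, BIT_6);
    s.d_string = beam_broken(porty_val, BIT_5);
    s.g_string = beam_broken(porty_val, BIT_4);
    return s;
}
```

For example, a port value of 0x70 has bit 7 low, so e_string becomes 0 while the other three strings stay at -1.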

ULTRASONIC DISTANCE SENSOR

On a guitar, the sound of the note being played changes not only based on the strings being plucked but also the fingers placed on the frets.

To determine which fret was being pressed, we used a SparkFun SEN-15569 ultrasonic distance sensor. Once triggered, this sensor sends out eight 40 kHz pulses that may reflect off any object in front of it. If the pulses are reflected back, the sensor’s echo output is a pulse whose width equals the time between sending the pulses and receiving the reflection. In our prototype, we used the sensor to measure the distance from the top of the neck of our bass guitar to the user’s playing hand, and used that result to determine on which fret the first finger was placed. The sensor needs a 5 V supply, which we provided from the MCU board’s Vin pin. No voltage regulator was needed because our final prototype uses the 5 V power adaptor. If the PIC32 were instead powered from a separate battery, a voltage regulator would be needed to keep the sensor at a steady 5 V without voltage spikes or current surges.
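The echo pulse width converts to distance with d = v·t/2, since the pulse covers the round trip to the hand and back. A minimal sketch, assuming a speed of sound of about 343 m/s (dry air near 20 °C); the function name is hypothetical, and the prototype itself compared raw timer counts against calibrated thresholds rather than converting to millimetres.

```c
/* Assumed speed of sound in air near 20 degrees C, in metres per second. */
#define SPEED_OF_SOUND_M_PER_S 343.0

/* Convert an echo pulse width (round-trip time, in microseconds) to the
 * one-way distance in millimetres: d = v * t / 2.
 * (m/s) * (us) = 1e-6 m = 1e-3 mm, hence the division by 1000. */
double echo_us_to_mm(double echo_us) {
    return SPEED_OF_SOUND_M_PER_S * echo_us / 1000.0 / 2.0;
}
```

For example, a 1000 µs echo corresponds to about 171.5 mm.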

This process is implemented in the thread protothreads_distance. The trigger pin, which starts the series of pulses when set, was connected to MCU pin RPA1, configured as an output. As long as the #define use_uart_serial statement was commented out in config_1_3_2.h, this pin had no other use, so nothing prevented us from sending the trigger signal on it. Pin A1 was set high for one millisecond and then cleared. The trigger only needs to be held high for at least 10 microseconds, so one millisecond was comfortably long enough.

The echo signal was read using input capture on MCU pin RB13. Input capture is a hardware feature that latches the value of a timer when a specified edge of a signal arrives on the capture pin, giving event timing accurate to within one timer tick of the edge. We chose this hardware approach over reading the echo on a GPIO pin and counting in software because it is less likely to hang: with GPIO polling and software timing, the protothread would have stayed stuck waiting for a pulse to return, preventing us from sending a new trigger signal.

The code that initializes the input capture can be found between lines 521 and 533 in main(). Input capture requires a timer to timestamp the edges of the incoming signal. We used timer3 for this purpose, configured to run continuously with a prescaler of 32. The prescaler keeps the input capture values from overflowing the timer. As an additional precaution, we declared the distance readings as unsigned integers.
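As a rough check on why the prescaler matters, here is the arithmetic under two assumptions not stated above: timer3 is a 16-bit timer clocked at the same 40 MHz used for timer2, and the speed of sound is about 343 m/s. The sensor’s internal delay before the echo pulse begins is ignored.

```latex
t_{\mathrm{tick}} = \frac{32}{40\ \mathrm{MHz}} = 0.8\ \mu\mathrm{s},
\qquad
t_{\mathrm{wrap}} = 2^{16}\, t_{\mathrm{tick}} \approx 52.4\ \mathrm{ms}

\text{without the prescaler: }
t_{\mathrm{wrap}} = \frac{2^{16}}{40\ \mathrm{MHz}} \approx 1.64\ \mathrm{ms}
\;\Rightarrow\;
d_{\max} \approx \frac{343\ \mathrm{m/s} \times 1.64\ \mathrm{ms}}{2} \approx 0.28\ \mathrm{m}
```

Under these assumptions, an unscaled timer would wrap for any target beyond about 28 cm, while with the prescaler the same 16-bit count covers about 52 ms, or roughly 9 m of range.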

Next, we configured capture1 to trigger an interrupt service routine (ISR) that reads the timer3 value on the falling edge of the signal. Using an ISR meant that no other part of the program could delay the capture, so we got the most accurate readings from the input capture. Capturing on the falling edge means a distance is produced only once the entire returned pulse has been received. Lastly, MCU pin RPB13 was connected to capture1 using peripheral pin select (PPS).

Since the ISR preempts any other running code, we kept it as brief as possible so that it would not hold up other functions. The ISR runs only two lines of code: mIC1ReadCapture() to save the timer3 value captured when the reflected pulse was received, and mIC1ClearIntFlag() to clear the input capture interrupt flag.

Within protothreads_distance, after the trigger signal had been held high for one millisecond, the capture1 interrupt flag was cleared and timer3 was reset to zero. Clearing the flag ensures that capture1 is updated with the most recent falling edge, and resetting the timer means the captured value directly measures the time between the outgoing 40 kHz pulses and the reflected pulse, with no extra arithmetic.

Once the ISR read from the input capture pin, a finite impulse response (FIR) filter, in the form of a moving average, was applied to make the value more reliable; without it, we saw a large amount of fluctuation. The averaging was done over the four most recent input capture values, saved in a circular buffer whose start and end indexes were updated with each new reading. The variable distance was incremented by the difference between the most recent reading and the oldest reading in this buffer, so it tracks the running sum of the last four captures.
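The running-sum update can be sketched as follows. This is a host-runnable model rather than the PIC32 code; the struct and function names are hypothetical, and dividing the sum by four to get a true average is an assumption (keeping the bare running sum, as distance does, only scales the calibration thresholds by four).

```c
#include <stdint.h>

#define FILTER_LEN 4  /* four most recent captures, as in the text */

/* Hypothetical state for a running-sum moving average. */
typedef struct {
    uint32_t buf[FILTER_LEN]; /* circular buffer of raw capture values */
    unsigned idx;             /* slot holding the oldest sample */
    uint32_t sum;             /* running sum of everything in buf */
} mavg_t;

void mavg_init(mavg_t *f) {
    for (unsigned i = 0; i < FILTER_LEN; i++) f->buf[i] = 0;
    f->idx = 0;
    f->sum = 0;
}

/* Replace the oldest sample with the newest, adjust the sum by
 * (newest - oldest), and return the average of the last FILTER_LEN values.
 * sum always contains buf[idx], so the unsigned subtraction cannot wrap. */
uint32_t mavg_update(mavg_t *f, uint32_t capture) {
    f->sum = f->sum - f->buf[f->idx] + capture;
    f->buf[f->idx] = capture;
    f->idx = (f->idx + 1) % FILTER_LEN;
    return f->sum / FILTER_LEN;
}
```

Because the buffer starts at zero, feeding in 100 four times returns 25, 50, 75, then 100; the first few outputs after start-up read low.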

When viewing the sensor’s echo pin on the oscilloscope, we noticed slight noise. We believe it is caused by switching within the sensor itself, most likely from its MHz clock. At first, we placed an electrolytic capacitor between power and ground. Although this reduced the noise, an electrolytic capacitor is only effective up to roughly 100 kHz, so it responds slowly to fast switching noise. Ultimately, we built a low-pass voltage divider filter, as shown below, using a ceramic capacitor, which remains effective at much higher frequencies and therefore gives our circuit a faster response.

Once the distance reading was averaged, it had to be calibrated. After working with the distance sensor, we noticed that its readings changed with the position and orientation of the object it was measuring. In our prototype, this object is the side of a hand, which is extremely hard to hold in a consistent position, presenting the same surface area, regardless of which string and fret it is on. To remedy this, we spent a considerable amount of time measuring the sensor’s output across various combinations of pressed strings and frets. The calibration data can be seen in the table in Results.

FLEX SENSORS

In order to accurately determine when individual strings of our bass are “plucked,” we used the SparkFun SEN-10264 two-inch flex sensors. At a high level, the idea for implementing these sensors is that we could use the variable resistance across the terminals in a voltage divider circuit, so that the output voltage could be used to determine which of four strings is being plucked (one string corresponding to each flex sensor). The result is that we can accurately pluck open strings, start and stop playing individual notes independent of the fret/finger position decided by the beam breaks and distance sensor, as would be the case in a real bass. To further liken the experience of our AirBass to an actual bass guitar, we have the flex sensors attached to the inner palm of a glove so that the integrated system can register moving a finger while wearing the glove, and begin playing the desired note. The final setup is shown below.

To construct our voltage divider from the supply Vcc = 3.3 V, we first measured the resistance of the flex sensors. We found that they have an unflexed resistance of about 30 kΩ, which increases approximately linearly to about 100 kΩ when fully flexed. Based on these values, we placed the flex sensor between the output node and ground and chose a series resistance Re = 50 kΩ to Vcc. This gives an output voltage that ranges from about 1.2 V (unflexed) to about 2.2 V (fully flexed), which is appropriate for 3.3 V logic.
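The divider range can be checked numerically. A minimal sketch, assuming ideal resistors and the flex sensor as the lower leg of the divider, so that Vout = Vcc · Rflex / (Re + Rflex); the function name is hypothetical.

```c
#define VCC_V    3.3     /* supply voltage */
#define R_SERIES 50000.0 /* Re, from Vcc to the output node, in ohms */

/* Divider output with the flex sensor between the output node and ground. */
double flex_divider_v(double r_flex_ohms) {
    return VCC_V * r_flex_ohms / (R_SERIES + r_flex_ohms);
}
```

flex_divider_v(30000.0) gives about 1.24 V and flex_divider_v(100000.0) gives 2.2 V, matching the range quoted above.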

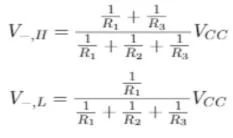

Next, we noticed that for our final implementation we only cared about a true/false answer to the question “is string i being plucked?” for each of the four strings i. Accordingly, we tried a few circuits to convert the analog output voltage (about 1.2 V to 2.2 V) to a digital high or low signal. Initially, we tried using the voltage divider output to drive an open-drain FET (with the source grounded and the gate driven by the divider output), since we could then easily pull the output up to the MCU’s power rail. We abandoned this approach for two reasons: (1) the FET was relatively slow to switch, and we saw an approximately exponential rise time on the oscilloscope, which is non-ideal since we need a fast response to play the bass realistically; and (2) to mask small mechanical variations in the sensor and make it “easier” to pluck the strings, we wanted some hysteresis in our trigger circuit, so that a user would have to intentionally unflex a finger to stop plucking the string rather than stopping accidentally. Hence, we used a Schmitt trigger.

As shown in Figure 8 and in the circuit schematic in Appendix C, the output of our voltage divider is the input to an inverting Schmitt trigger, connected to the inverting terminal of the amplifier. With the resistor values in that schematic, the circuit has a high threshold of 2.1 V and a low threshold of 1.5 V. When the output is high (3.3 V) and the input (V-) rises above 2.1 V, the circuit switches the output to 0 V; when the output is low and the input falls below 1.5 V, the circuit switches the output back to 3.3 V. Since our voltage divider ranges from about 1.2 V to 2.2 V, both thresholds are within reach, so each circuit’s output is digital low when the sensor is flexed and high when it is not, with some hysteresis. Our final design has four of these circuits, one per string and flex sensor. In practice this worked reasonably well, but for some sensors, unflexing far enough to drop back below the low threshold was a little difficult. Not all of the sensors have the same range of resistances, and for one sensor in particular it was difficult to make the output return high, while it worked like a charm for the others.
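The hysteresis can be modelled in software to see which finger positions toggle the output. This sketch assumes ideal switching at exactly the 2.1 V and 1.5 V thresholds given above; the function name is hypothetical, and true stands for a 3.3 V output.

```c
#include <stdbool.h>

#define V_TH_HIGH 2.1  /* upper threshold, volts */
#define V_TH_LOW  1.5  /* lower threshold, volts */

/* Inverting Schmitt trigger: given the divider voltage and the current
 * output level (true = 3.3 V), return the new output level. */
bool schmitt_inverting(double v_in, bool out_high) {
    if (out_high && v_in > V_TH_HIGH) return false; /* flexed past 2.1 V */
    if (!out_high && v_in < V_TH_LOW) return true;  /* relaxed below 1.5 V */
    return out_high;                                /* inside the hysteresis band */
}
```

Starting high, an input of 2.2 V (fully flexed) drives the output low, and it stays low at 1.8 V until the input falls below 1.5 V, which is close to the 1.24 V unflexed value.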

FINAL INTEGRATION

All of the sensors described above were combined to produce synthetic bass notes in response to the user. Each sensor’s thread set variables that were used to determine the frequency of the sound, when it starts, and how long it lasts.

Within main(), timer2 was configured with a period of 909 cycles, so that it interrupts about 44,000 times a second (909 is 40 MHz divided by our hardcoded sampling frequency of 44 kHz). To make sure the big board would output to the DAC, the DAC chip select was configured by making MCU pin RB4 an output. Then the sine lookup tables were calculated. We first created a table of plain sine values, sine_table, covering one complete cycle of a sine wave with sine_table_size data points, and then created a new table, sine_table_harmonix, also covering one complete cycle, which adds five harmonics with hardcoded weights to the original wave to produce a more string-like sound. We found these weights on the DAC page of the ECE 4760 website. Each value of sine_table_harmonix was then scaled to lie within the inclusive range [-1, 1], like the original sine table. Lastly, the phase increment array ph_incr was filled with values matching the string/fret combinations of a bass guitar. This array contained 23 values: each string has an open note and six frets, but because adjacent strings are a perfect fourth (five semitones) apart, notes are shared between strings, and playing the fifth fret on one string produces the same note as the next open string.
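The contents of ph_incr can be sketched as direct digital synthesis increments. This assumes a 32-bit phase accumulator (so incr = f · 2^32 / Fs), equal temperament, and a standard-tuned bass whose open E is about 41.2 Hz; the helper names are hypothetical. Because adjacent strings are five semitones apart, string i at fret k maps to entry 5i + k.

```c
#include <stdint.h>

#define FS_HZ       44000.0             /* hardcoded sampling frequency from the text */
#define E1_HZ       41.203              /* assumed: open E of a standard-tuned bass */
#define SEMITONE    1.0594630943592953  /* 2^(1/12), one equal-tempered semitone */
#define PH_INCR_LEN 23                  /* table length stated in the text */

static uint32_t ph_incr[PH_INCR_LEN];

/* Entry n holds the increment for the note n semitones above open E,
 * assuming a 32-bit phase accumulator: incr = f * 2^32 / Fs. */
void build_ph_incr(void) {
    double f = E1_HZ;
    for (int n = 0; n < PH_INCR_LEN; n++) {
        ph_incr[n] = (uint32_t)(f * 4294967296.0 / FS_HZ);
        f *= SEMITONE;  /* step up one semitone */
    }
}

/* String i (E = 0, A = 1, D = 2, G = 3) at fret k uses entry 5*i + k,
 * because adjacent strings are five semitones apart. */
uint32_t note_incr(int string_idx, int fret) {
    return ph_incr[5 * string_idx + fret];
}
```

With this layout, note_incr(0, 5) and note_incr(1, 0) read the same entry, which is the “fifth fret equals the next open string” property described above.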

The DAC ISR, connected to timer2, was the final piece of the puzzle. First, it cleared the timer2 interrupt flag. Then conditional logic determined the value, built from ph_incr and sine_table_harmonix, to output to the DAC. The first check was whether at least one of the strings had been “plucked”, as determined by the flex sensor glove.

The flex sensor outputs were read on pins Z4, Z5, Z6, and Z7 of the port expander. In an SPI critical section, we read the current Z port into a local variable, and outside this critical section we checked individual bits of that word to determine which flex sensors were flexed. We set the integers e_pluck, a_pluck, d_pluck, and g_pluck to 0 or -1, depending on which bits were high, to indicate whether each sensor was being flexed.
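A host-side sketch of the Z-port decoding, which also computes the string_depressed flag used by the envelope logic. The assignment of Z7 through Z4 to E, A, D, and G is hypothetical, and so is treating a low bit as a flexed finger, chosen to match the glove behaviour reported in Results (output low when a finger is fully flexed).

```c
#include <stdint.h>
#include <stdbool.h>

/* Hypothetical mapping of port Z bits to strings: Z7..Z4 = E, A, D, G. */
static const uint8_t flex_mask[4] = {0x80, 0x40, 0x20, 0x10};

/* Set pluck[i] to 0 when string i is plucked and -1 otherwise, and return
 * whether any string is plucked (the string_depressed flag).
 * Assumes a low bit means the finger is flexed. */
bool decode_plucks(uint8_t portz_val, int pluck[4]) {
    bool any = false;
    for (int i = 0; i < 4; i++) {
        bool flexed = (portz_val & flex_mask[i]) == 0;
        pluck[i] = flexed ? 0 : -1;
        any = any || flexed;
    }
    return any;
}
```

Reading the port once and decoding a local copy, as the text describes, keeps the SPI critical section as short as possible.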

If a string had been plucked, the phase variable for that string (e_phase, a_phase, d_phase, g_phase) was incremented. The increment for each phase variable was the entry at index 5i + i_fret in ph_incr, where i is the string represented as an integer (E = 0; A = 1; D = 2; G = 3) and i_fret is that string’s fret variable (e_fret, a_fret, d_fret, or g_fret). The 5i term reflects the fact that the strings are separated by fourths within ph_incr, so each successive open string’s frequency is five entries further along the table. The fret variables come from the ultrasonic distance sensor readings.

The distance ranges from the ultrasonic distance sensor were used in a series of conditional statements to set the fret for each string being pressed: e_fret, a_fret, d_fret, and g_fret. If none of the four strings were pressed (no beam was broken), all four fret variables were set to zero, indicating open strings. If at least one string was pressed, its fret variable was set if 1) that specific string was pressed and 2) the distance sensor reading was within the range for that fret. Since farther frets produce larger distance readings, their ranges were checked in earlier if statements. Because each check only tests whether the distance is below a threshold, a small reading satisfies the checks for several frets, and the last matching check wins; putting the farther frets first ensures that the nearest matching fret is the final result. For example, on the E string the first fret expected a distance reading of less than 120. If the check for the first fret came before the check for the second fret, a reading below 120 would also pass the second fret’s check, and the second fret would be the final (and wrong) result.

Cascading ranges (which check only whether the distance is below a calibration value) were used rather than bounded ranges (which check whether the distance is both above one calibration value and below another). This was because the boundaries between frets varied from reading to reading. We felt that cascading ranges would reduce accidental fret switching caused by the imprecision of the ultrasonic distance sensor.
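The cascading checks for one string can be sketched as below. Only the E string’s first-fret threshold of 120 comes from the text; the other thresholds are placeholders, and returning 0 (open) when the reading exceeds every threshold is an assumption. Iterating from the farthest fret to the nearest reproduces the ordering of the if statements described above.

```c
#define NUM_FRETS 6

/* Hypothetical calibration for one string: fret k is chosen when
 * distance < thresh[k - 1]. Only 120 (first fret, E string) is from the text. */
static const unsigned e_thresh[NUM_FRETS] = {120, 160, 200, 240, 280, 320};

/* string_pressed is nonzero when this string's beam is broken.
 * Farthest fret first, so each nearer fret's check overwrites a farther one;
 * the result is the nearest fret whose threshold exceeds the reading. */
int fret_from_distance(unsigned distance, int string_pressed,
                       const unsigned thresh[NUM_FRETS]) {
    int fret = 0;  /* 0 = open string */
    if (!string_pressed) return 0;
    for (int k = NUM_FRETS; k >= 1; k--) {
        if (distance < thresh[k - 1]) fret = k;
    }
    return fret;
}
```

With these placeholder values, a reading of 150 passes the checks for frets 6 through 2, and the second-fret check, run last among them, sets the result to 2.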

The variable sum_strings stores the waveform value from sine_table_harmonix. As with the phase variables, if a string had been plucked, sum_strings was set to the value in sine_table_harmonix indexed by that string’s phase variable shifted right by 24 bits, which keeps only the top bits of the phase so that the index stays within the bounds of the table.
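The per-sample lookup can be sketched as a phase accumulator indexed by its top bits. This assumes a 32-bit unsigned phase and a 256-entry table, so that phase >> 24 always falls between 0 and 255; the function name is hypothetical.

```c
#include <stdint.h>

#define TABLE_SIZE 256  /* assumed: 2^8 entries, so phase >> 24 is always in range */

/* Advance one string's 32-bit phase accumulator by its increment and look up
 * the waveform using the top 8 bits of the phase. */
float next_sample(uint32_t *phase, uint32_t incr, const float table[TABLE_SIZE]) {
    *phase += incr;  /* unsigned overflow wraps the phase around the cycle */
    return table[*phase >> 24];
}
```

Unsigned overflow in C is well defined, so the phase returns to the start of the cycle without any explicit modulo.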

With the frequency set, the next step was the amplitude envelope of the sound, which was also based on the flex sensor glove output. The Boolean state variables string_depressed and prev_string_depressed indicate whether one of the four strings is currently being plucked and whether one was plucked at the previous read of the Z port. When we transition from nothing plucked to some string being plucked, we start timing a new note envelope by setting both current_amplitude and curr_attack_time to 0. Similarly, when we transition from something plucked to nothing plucked, we set current_amplitude to max_amplitude and curr_decay_time to 0.

In the audio ISR, even more conditional logic is used around string_depressed and prev_string_depressed. If at least one string was depressed and curr_attack_time had not reached its maximum, this meant that the sine wave hadn’t reached its maximum amplitude, and therefore curr_attack_time and current_amplitude were increased accordingly. Otherwise, if the curr_attack_time was at maximum value, the current_amplitude was set to max_amplitude for redundancy. If no strings were depressed and curr_decay_time had not reached its maximum, this meant that the sine wave hadn’t reached its minimum amplitude, and therefore curr_decay_time was incremented and current_amplitude decremented. Otherwise, if the curr_decay_time was at maximum value, current_amplitude was set to 0 (the minimum sine wave amplitude) for redundancy. The attack portion of the sine wave increased linearly, while the decay part of the sine wave decreased linearly. The result of this logic gives the ability to play sustained notes with the attack and decay part of the envelope so that it sounds less harsh and ideally more like an actual bass.
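The transition handling and the linear attack and decay can be combined into one per-sample function. This is a sketch: the peak of 2048 follows the DAC scaling described next, but the attack and decay lengths and the names are hypothetical, and the amplitude is computed from the counters rather than accumulated so that it lands exactly on its endpoints.

```c
#include <stdbool.h>

#define MAX_AMPLITUDE 2048.0f /* peak amplitude, matching the 2048 DAC offset */
#define ATTACK_TIME   1000    /* hypothetical: samples to ramp up */
#define DECAY_TIME    4000    /* hypothetical: samples to ramp down */

typedef struct {
    float current_amplitude;
    int curr_attack_time;
    int curr_decay_time;
    bool prev_string_depressed;
} envelope_t;

void envelope_init(envelope_t *e) {
    e->current_amplitude = 0.0f;
    e->curr_attack_time = 0;
    e->curr_decay_time = DECAY_TIME;  /* start silent, as if a decay had finished */
    e->prev_string_depressed = false;
}

/* Advance the envelope by one audio sample given the string_depressed flag. */
float envelope_step(envelope_t *e, bool string_depressed) {
    if (string_depressed && !e->prev_string_depressed) {
        /* nothing plucked -> something plucked: restart the attack */
        e->current_amplitude = 0.0f;
        e->curr_attack_time = 0;
    } else if (!string_depressed && e->prev_string_depressed) {
        /* something plucked -> nothing plucked: decay from the peak */
        e->current_amplitude = MAX_AMPLITUDE;
        e->curr_decay_time = 0;
    }
    e->prev_string_depressed = string_depressed;

    if (string_depressed) {
        if (e->curr_attack_time < ATTACK_TIME) {
            e->curr_attack_time++;
            /* linear ramp from 0 up to MAX_AMPLITUDE */
            e->current_amplitude = MAX_AMPLITUDE * e->curr_attack_time / ATTACK_TIME;
        } else {
            e->current_amplitude = MAX_AMPLITUDE;
        }
    } else {
        if (e->curr_decay_time < DECAY_TIME) {
            e->curr_decay_time++;
            /* linear ramp from MAX_AMPLITUDE down to 0 */
            e->current_amplitude =
                MAX_AMPLITUDE * (DECAY_TIME - e->curr_decay_time) / DECAY_TIME;
        } else {
            e->current_amplitude = 0.0f;
        }
    }
    return e->current_amplitude;
}
```

A note held for longer than ATTACK_TIME samples sustains at full amplitude, and releasing it always decays from the peak, as described in the text.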

The final output of AirBass was sent to DAC output A (DAC_A): the sum of 2048 and the product of current_amplitude and sum_strings. Since sine_table_harmonix lies within the range -1 to 1, so does sum_strings. Multiplying by current_amplitude scales this to the range -2048 to 2048, and adding 2048 shifts it to roughly 0 to 4096 so that the DAC can properly receive the waveform. This value was then written over SPI to produce the sound, after which the SPI bus was free for either the port expander or the DAC. To prevent collisions between the DAC and the port expander on the shared SPI bus, we used SPI critical sections wherever needed and kept the code within them short, so they would not take too much time.
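The final scaling step can be sketched as follows, assuming a 12-bit DAC (codes 0 to 4095). The clamp is an addition in this sketch, because 2048 + 2048 = 4096 is one code beyond that range; the function name is hypothetical, and the SPI write itself is omitted.

```c
#include <stdint.h>

#define DAC_MID 2048  /* mid-scale offset */
#define DAC_MAX 4095  /* largest 12-bit code */

/* 2048 + current_amplitude * sum_strings, clamped to the 12-bit range. */
uint16_t dac_code(float current_amplitude, float sum_strings) {
    int v = DAC_MID + (int)(current_amplitude * sum_strings);
    if (v < 0) v = 0;
    if (v > DAC_MAX) v = DAC_MAX;  /* 2048 + 2048 would otherwise be 4096 */
    return (uint16_t)v;
}
```

Silence (amplitude 0) sits at mid-scale, 2048, so the waveform swings symmetrically around the DAC’s midpoint.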

An audio jack was soldered to the DAC_A output so it could be plugged into a speaker for the user to hear the produced sound. There was some slight crackling, so we added a low-pass filter between DAC_A and the audio jack to keep the sound smooth and steady.

RESULTS

As shown in our final demo video, we were able to put together a functioning prototype for AirBass. It implemented the break beam, ultrasonic distance, and flex sensors we set out to use at the start of this six-week endeavor. Despite the output of each being fairly simple, it took a lot of work to fully understand each of the sensors, as well as what (if any) accompanying circuits were necessary.

The final result experienced no lag when producing a sound, whether it was an open string (a plucked string with no finger placement breaking a beam sensor) or a fingered note. The produced sounds matched the expected frequency and amplitude, no matter the string/fret combination, when checked on the oscilloscope.

One of the shortcomings we experienced was due to the calibration of the distance sensor. If the user’s hand was tilted differently than how it was when calibrating—reducing or increasing the amount and angle of the surface area of the side of their hand—the distance measured would change slightly. This slight change, depending on the distance of the fret, would greatly affect which fret the program would detect as being played. For example, as shown in the table below, the second fret of the A string was at a distance value less than 140. If the user’s finger was placed on the second fret but their hand was tilted more towards the distance sensor than previously, the distance sensor would see a pulse reflected back quicker, therefore reading a smaller distance than expected. This could drop the distance down below 120, playing on the first fret. If the user’s hand was tilted away from the distance sensor, the third fret could be played instead.

This issue was reduced once we calibrated separately for each string, after recognizing that the position of the user’s hand changes depending on how far their fingers must stretch to break the appropriate beam. However, as discussed in Conclusions, more hardware fixes could have further refined this aspect of the project.

A second shortcoming was the flex sensor glove. Although it worked correctly, outputting a low signal when a finger was fully flexed and a high signal when unflexed, it required a lot of precise effort to bend individual fingers without disturbing the others. If a finger was accidentally flexed somewhat, that string’s sound could preempt the intended note. On one hand, this is something every guitar player needs to master in order to play the intended melody or riff, but on the other, the glove could’ve been configured in a more beginner-friendly way.

The final shortcoming was the physical layout of the guitar itself. Originally, we wanted to have eight frets on each string, laid out every inch. We had to reduce this to six frets when we realized that there wouldn’t be room for the user’s hand to play the seventh and eighth frets, due to the proximity and size of the beam break sensors. If we had more time, we’d reposition the beam breaks farther back, or perhaps even nestled inside of the wood, so that there would be more room for a player’s hand to comfortably reach all of the frets. Additionally, the neck of the guitar was very wide and could make it somewhat difficult to reach the farther strings, depending on the size and length of the user’s hand. This dimension was necessary so that none of the break beam receivers/emitters overlapped each other and detected a broken beam incorrectly, but made for somewhat awkward playing.

Despite this, we ended up with a finished product that played the correct notes for the intended duration. The prototype is safe, in that all circuits and sensors are properly mounted and covered. The sensors themselves don’t pose any danger to the user, so the main concern was making sure that they were all securely fastened onto the board. An ergonomic addition was the strap we made, after realizing that it was inconvenient to expect the user to balance the wood plank on their arm while also controlling the flex sensor glove. This made it easier for the user to play sitting or standing.

CONCLUSIONS

FUTURE IMPROVEMENTS

Although the distance sensor performed as intended, its calibration could have been improved. Most of our work with the sensor was finding the distance values at each fret and adjusting them as we learned how a user naturally holds our prototype. As mentioned earlier, the human hand isn’t a flat, regular surface, so even slightly tilting the playing hand affects the distance reading. Although we tried to alleviate this by widening the distance ranges for each fret, our final prototype still toggled slightly between note frequencies when fingers were placed on the boundary between two frets. One fix would be adding more distance sensors, perhaps one for each string (to account for the different readings at each string position) or one at the other end of the guitar neck (to calibrate against the difference of the two readings). Another fix would be to apply a software median filter to the distance readings. Where the sensor, rather than the user, is inconsistent, a median filter would estimate the true position of the user’s hand better than our averaging filter, because a single spurious spike or dip shifts an average but is simply discarded by a median.
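For comparison with the averaging filter, a median over a short window can be sketched as below. The window length of five and the function name are hypothetical choices; an odd length makes the median a single reading.

```c
#include <stdint.h>

#define MEDIAN_LEN 5  /* hypothetical odd window length */

/* Median of the last MEDIAN_LEN readings. The window is copied and the copy
 * insertion-sorted, so the caller's circular buffer keeps its time order. */
uint32_t median_of_window(const uint32_t window[MEDIAN_LEN]) {
    uint32_t s[MEDIAN_LEN];
    for (int i = 0; i < MEDIAN_LEN; i++) s[i] = window[i];
    for (int i = 1; i < MEDIAN_LEN; i++) {
        uint32_t key = s[i];
        int j = i - 1;
        while (j >= 0 && s[j] > key) {
            s[j + 1] = s[j];
            j--;
        }
        s[j + 1] = key;
    }
    return s[MEDIAN_LEN / 2];
}
```

For the window {100, 102, 900, 101, 99}, the median is 101, while the mean is about 260, pulled far off by one bad echo.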

The flex sensor glove exhibited hysteresis when flexing or unflexing each finger, meaning the user had to fully unflex a finger to un-pluck it. This could have been remedied by adjusting the Schmitt trigger thresholds so that a partially unflexed finger would be enough to drive the output high. Not only would this spare the user from straining individual fingers, but it would also feel more like the plucking motion on a real bass. Additionally, moving the glove sometimes caused the soldered circuits to pop out of their headers. This was because the glove was connected directly to the board with solid core wire. One fix would be switching to stranded wire, which is more pliable and would put less stress on the solder joints and header pins of our board and additional circuits. Another, more complicated fix would be to replace the direct connection between the glove and the board with a wireless link, since the flex sensors only need to transmit whether each one is flexed. This would make AirBass even more similar to an actual guitar by keeping the instrument and the player relatively separate.

For the physical layout of the sensors on the plank, the beam break sensors could have been placed in a hollowed-out section of the wood (with the receivers and emitters still exposed) and farther away from the last fret. This would give users enough room to comfortably place their hand on the last few frets without worrying about how their hand faced the ultrasonic distance sensor or knocking over the beam break sensors.

An idea we toyed with at the beginning of the project was reducing the number of emitters, since each emitter’s IR signal spread out in a cone and could be picked up by more than one receiver by the time it reached the other end of the guitar neck. Ultimately, we decided to use emitter-receiver pairs angled away from each other, but reducing the number of emitters while still working with the angle of the emitters and receivers may have let us decrease the spacing between the strings. This would let us narrow the wooden plank and make the farther strings easier to reach, especially for players with disabilities or with smaller and/or less nimble hands.
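The cone effect can be made concrete with a simple point-source model. Assume (these symbols are introduced here, not measured values from our prototype) that each emitter radiates a cone with full beam angle θ, as listed on its datasheet, that the beam travels a distance L along the neck to the receivers, and that adjacent strings sit a distance s apart with parallel, un-angled pairs:

```latex
r(L) = L \tan\!\left(\frac{\theta}{2}\right),
\qquad
\text{neighboring receiver illuminated if } s < L \tan\!\left(\frac{\theta}{2}\right)
```

Under this model, parallel pairs need s ≥ L tan(θ/2) to avoid crosstalk, and that minimum grows with the length of the neck. The same spread is what would make fewer emitters workable, since a single emitter reaches every receiver within r(L) of its axis.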

Lastly, although the harmonics in sine_table_harmonix produced a string-like sound, the gap between a real bass guitar and AirBass could have been narrowed even further. Implementing the Karplus-Strong algorithm to generate our DAC output has been shown in other projects to produce a sound that can be mistaken for a physically plucked string. This would have given our synthesized notes more of the richness and natural decay of a real bass guitar string.

INTELLECTUAL PROPERTY

As far as we know, there are no intellectual property issues with our project. We used public datasheets for the sensors, designed our own circuits, and built on code from our previous lab solutions. Though we did use references and resources to check our hardware and software implementations, nothing was directly copied without significant changes. We technically reverse engineered a bass guitar, but didn’t copy any specific guitar manufacturer’s layout or functionality in implementing AirBass. Instead, we worked with the general concept of a bass guitar, which isn’t covered by any patent or trademark.

A cursory search of the USPTO database doesn’t turn up a similar “air guitar” design using the sensors and software algorithms we used, so there may be patenting opportunities if we choose to pursue them. Our project could also be publishable, especially if the aforementioned modifications were made.

ETHICS

As a team, we fully complied with the IEEE Code of Ethics. We’ve tried to design in as ethical and sustainable a way as possible, while also avoiding conflicts of interest. When writing our proposal, we strove to set realistic goals, and our final product ultimately achieved them. We’ve designed AirBass to not require any electrical or software experience, so that users don’t endanger themselves when using our product. All resources used have been cited below in References, as we wouldn’t have been able to finish our project to the degree that we did without them. All team members and users of the product were and are treated fairly, during both the construction and testing of AirBass. Overall, this was an ethical pursuit, as defined by IEEE.

SAFETY AND LEGAL CONSIDERATIONS

There are no parts of AirBass that could physically or mentally injure someone. All voltages are kept as low as possible, and all wire and solder connections are insulated from the user by electrical tape, wood, and/or wool, none of which is conductive. The volume of AirBass can be controlled with the knob on the speaker, so users can make sure they don’t play too loudly. No images or visible lights are used (the beam break sensors emit infrared), so photosensitive or colorblind users face no added risk of using AirBass incorrectly.

No radiation, transmission, conductive surfaces, or automobiles are involved in this product, nor any other hardware or software that is overseen by regulations.

COMMENTED CODE

```c
/*
 * Final Project: AirBass
 * Works with beam break sensors, an ultrasonic distance sensor, and flex
 * sensors
 * DAC channel A has audio
 * DAC channel B has amplitude envelope
 * Author: Jackson Kopitz (jsk363), Caitlin Stanton (cs968),
 * Peter Cook (pac256), cf. Bruce Land
 * For use with Sean Carroll's Big Board
 * Target PIC: PIC32MX250F128B
 */

////////////////////////////////////
// clock AND protoThreads configure!
// You MUST check this file!
// has #define use_uart_serial commented out
#include "config_1_3_2.h"
// threading library
#include "pt_cornell_1_3_2.h"
// yup, the expander
#include "port_expander_brl4.h"
////////////////////////////////////
// graphics libraries
#include "tft_master.h"
#include "tft_gfx.h"
// need for rand function
#include <stdlib.h>
// need for sine function
#include <math.h>
// the fixed point types
#include <stdfix.h>
////////////////////////////////////

////////////////////////////////////
// SPI things
// lock out timer interrupt during spi comm to port expander
// This is necessary if you use the SPI2 channel in an ISR
#define start_spi2_critical_section INTEnable(INT_T2, 0);
#define end_spi2_critical_section INTEnable(INT_T2, 1);
// for port expander
volatile SpiChannel spiChn = SPI_CHANNEL2; // the SPI channel to use
volatile int spiClkDiv = 4; // 10 MHz max speed for port expander!!
////////////////////////////////////

////////////////////////////////////
// port A and port B setup
#define EnablePullDownA(bits) CNPUACLR=bits; CNPDASET=bits;
#define DisablePullDownA(bits) CNPDACLR=bits;
#define EnablePullUpA(bits) CNPDACLR=bits; CNPUASET=bits;
#define DisablePullUpA(bits) CNPUACLR=bits;
#define EnablePullDownB(bits) CNPUBCLR=bits; CNPDBSET=bits;
#define DisablePullDownB(bits) CNPDBCLR=bits;
#define EnablePullUpB(bits) CNPDBCLR=bits; CNPUBSET=bits;
#define DisablePullUpB(bits) CNPUBCLR=bits;
////////////////////////////////////

////////////////////////////////////
// DAC things
// A-channel, 1x, active
#define DAC_config_chan_A 0b0011000000000000
// B-channel, 1x, active
#define DAC_config_chan_B 0b1011000000000000
////////////////////////////////////

// === thread structures ============================================
// thread control structs
static struct pt pt_print, pt_time, pt_beam, pt_distance, pt_flex;

// == Capture 1 ISR ====================================================
// Timer3 value at the falling edge of the echo pulse from the ultrasonic
// distance sensor (Timer3 is zeroed after each trigger, so this grows
// with the distance to the hand)
volatile unsigned int capture;
// runs on every falling edge of the echo signal
void __ISR(_INPUT_CAPTURE_1_VECTOR, ipl3) C1Handler(void) {
    // read the capture register
    capture = mIC1ReadCapture();
    // clear the input capture interrupt flag
    mIC1ClearIntFlag();
}

// == Audio DAC ISR ===================================================
// audio sample frequency
#define Fs 44000.0
// need this constant for setting synthesis frequency
#define two32 4294967296.0 // 2^32
// sine lookup tables for synthesis: sine_table for the pure sine wave
// used in frequency mode, sine_table_harmonix for additive synthesis -
// harmonics added in with a choice of amplitude & option to make sawtooth
// values scaled based on 6 frets per E, A, D, and G strings of a bass guitar
#define sine_table_size 256
volatile _Accum sine_table[sine_table_size];
volatile _Accum sine_table_harmonix[sine_table_size];
// phase accumulators for the four strings
volatile unsigned int e_phase, a_phase, d_phase, g_phase;
// phase increments also tabulated to set these frequencies such that
// ph_incr[i] = freqs[i]*two32/Fs, set in main.
volatile unsigned int freqs[23] = {82,87,92,98,104,110,117,123,131,139,147,156,
                                   165,175,185,196,208,220,233,247,262,277,294};
volatile unsigned int ph_incr[23];
// variables for if the given string is pressed
// (0 = beam broken, -1 = not pressed; set in protothread_beam)
volatile int e_string, a_string, d_string, g_string;
// variables for if the string has been strummed
// (0 = plucked, -1 = not plucked; set in protothread_flex)
volatile int e_pluck, a_pluck, d_pluck, g_pluck;
// current fret location of hand
// indices into ph_incr & decides notes being played
volatile int e_fret, a_fret, d_fret, g_fret;
// waveform amplitude
volatile _Accum max_amplitude = 2000;
// tuning parameters for additive synthesis. we include the first 6 harmonics
// (the fundamental and 5 overtones) of a given note. harmonix[i] is the
// amplitude of the (i+1)th harmonic when we add it to sine_table_harmonix.
static float harmonix[6] = {1,0.8,0,0.6,0.5,0};
// waveform amplitude envelope parameters
// rise/fall time envelope 44 kHz samples
volatile unsigned int attack_time = 500, decay_time = 500;
// 0 <= current_amplitude < 2048
volatile _Accum current_amplitude;
// amplitude change per sample during attack and decay
// no change during sustain
volatile _Accum attack_inc, decay_inc;
// using Timer 2 interrupt handler
volatile _Accum sum_strings;
volatile unsigned int DAC_data_A, DAC_data_B; // output values
// interrupt ticks since beginning of new note
volatile unsigned int curr_attack_time, curr_decay_time;
// written in protothread_flex and read in the Timer 2 ISR, so volatile
static volatile _Bool string_depressed, prev_string_depressed;

void __ISR(_TIMER_2_VECTOR, ipl2) Timer2Handler(void) {
    int junk;
    // clear the timer interrupt flag
    mT2ClearIntFlag();
    // logic to determine where to index into sine_table_harmonix
    // if the pluck variable for a string isn't -1, the string has been plucked
    // as determined in protothread_flex
    // if a string has been plucked, its phase accumulator advances by
    // ph_incr[offset + fret], where the fret is measured in
    // protothread_distance
    // bass strings are tuned a perfect fourth (5 semitones) apart, hence
    // why the E, A, D, G offsets into ph_incr are each separated by 5
    if (e_pluck != -1 || a_pluck != -1 || d_pluck != -1 || g_pluck != -1) {
        if (e_pluck != -1) {
            e_phase += ph_incr[0+e_fret];
        }
        if (a_pluck != -1) {
            a_phase += ph_incr[5+a_fret];
        }
        if (d_pluck != -1) {
            d_phase += ph_incr[10+d_fret];
        }
        if (g_pluck != -1) {
            g_phase += ph_incr[15+g_fret];
        }
        // sum of the current table samples of every plucked string
        sum_strings = 0;
        // logic to ensure that if a string is plucked, we index into
        // sine_table_harmonix based on the appropriate phase
        // (the top 8 bits of the 32-bit phase select one of 256 entries)
        if (e_pluck != -1) sum_strings += sine_table_harmonix[e_phase >> 24];
        if (a_pluck != -1) sum_strings += sine_table_harmonix[a_phase >> 24];
        if (d_pluck != -1) sum_strings += sine_table_harmonix[d_phase >> 24];
        if (g_pluck != -1) sum_strings += sine_table_harmonix[g_phase >> 24];
    }
    // logic for setting the amplitude envelope
    // if any string is depressed (i.e. any flex sensor is flexed, as set in
    // protothread_flex) and the curr_attack_time is not at its max, it means
    // the sine wave hasn't reached its full amplitude, so increment
    // curr_attack_time and current_amplitude accordingly
    // if no string is depressed and the curr_decay_time is not at its max, it
    // means the sine wave hasn't reached its full decay, so increment
    // curr_decay_time and decrease current_amplitude accordingly
    // if any string is depressed but the max amplitude envelope has been
    // reached, reinforce it by setting current_amplitude equal to
    // max_amplitude
    // if no string is depressed but the max decay envelope has been reached,
    // reinforce it by setting current_amplitude equal to zero
    if ((string_depressed == 1) && (curr_attack_time < attack_time)) {
        curr_attack_time += 1;
        current_amplitude = current_amplitude + attack_inc;
    } else if ((string_depressed == 0) && (curr_decay_time < decay_time)) {
        curr_decay_time += 1;
        current_amplitude = current_amplitude - decay_inc;
    } else if (string_depressed == 1 && (curr_attack_time >= attack_time)) {
        current_amplitude = max_amplitude;
    } else if (string_depressed == 0 && (curr_decay_time >= decay_time)) {
        current_amplitude = 0;
    }
    // output produced sine wave from sum_strings to DAC_A
    // (offset by 2048 to center it in the 12-bit DAC range)
    DAC_data_A = (int)(current_amplitude*sum_strings) + 2048;
    // output amplitude envelope on DAC_B
    DAC_data_B = current_amplitude;
    // test for ready
    while (TxBufFullSPI2());
    // reset spi mode to avoid conflict with expander
    SPI_Mode16();
    // DAC CS low to start the channel A transaction
    mPORTBClearBits(BIT_4); // start transaction
    // write to spi2
    WriteSPI2(DAC_config_chan_A | (DAC_data_A & 0xfff));
    // test for done
    while (SPI2STATbits.SPIBUSY); // wait for end of transaction
    // MUST read to clear buffer for port expander elsewhere in code
    junk = ReadSPI2();
    // CS high
    mPORTBSetBits(BIT_4); // end transaction
    // DAC CS low again to start the channel B transaction
    // (both channels are on the same DAC chip, so they share CS on RB4)
    mPORTBClearBits(BIT_4); // start transaction
    // write to spi2
    WriteSPI2(DAC_config_chan_B | (DAC_data_B & 0xfff));
    // test for done
    while (SPI2STATbits.SPIBUSY); // wait for end of transaction
    // MUST read to clear buffer for port expander elsewhere in code
    junk = ReadSPI2();
    // CS high
    mPORTBSetBits(BIT_4); // end transaction
}

// === print a line on TFT =====================================================
// used for debugging, not for the final prototype
// string buffers
static char buffer[60];
static char buffer2[60];
static char buffer3[60];
static char buffer4[60];
static char buffer5[60];
static char buffer6[60];
static char buffer7[60];
static char buffer8[60];
static char buffer9[60];
void printLine(int line_number, char* print_buffer, short text_color, short back_color) {
    // line number 0 to 31
    /// !!! assumes tft_setRotation(0);
    // print_buffer is the string to print
    int v_pos;
    v_pos = line_number * 10;
    // erase the pixels
    tft_fillRoundRect(0, v_pos, 239, 8, 1, back_color); // x,y,w,h,radius,color
    tft_setTextColor(text_color);
    tft_setCursor(0, v_pos);
    tft_setTextSize(1);
    tft_writeString(print_buffer);
}

//=== Read beam break sensor ===================================================
// variable to keep track of current value on PORTY expander
unsigned int porty_val;
// variable to keep track of previous value on PORTY expander
unsigned int prev_porty_val;
static PT_THREAD (protothread_beam(struct pt *pt)) {
    PT_BEGIN(pt);
    while (1) {
        // update prev_porty_val
        prev_porty_val = porty_val;
        // lock SPI2 so that port expander won't be interrupted
        start_spi2_critical_section;
        // read PORTY expander
        porty_val = (readPE(GPIOY));
        // unlock SPI2 since port expander functions are completed
        end_spi2_critical_section;
        // logic to determine which of the four beam break sensors have been
        // broken, which means that something has passed between the
        // receiver and emitter (a low input means the beam is broken)
        // if the beam is broken, the corresponding string variable is 0,
        // otherwise it's -1
        // Y7 corresponds to the E string
        // Y6 corresponds to the A string
        // Y5 corresponds to the D string
        // Y4 corresponds to the G string
        if ((porty_val & BIT_7) != 0x80) {
            e_string = 0;
        } else {
            e_string = -1;
        }
        if ((porty_val & BIT_6) != 0x40) {
            a_string = 0;
        } else {
            a_string = -1;
        }
        if ((porty_val & BIT_5) != 0x20) {
            d_string = 0;
        } else {
            d_string = -1;
        }
        if ((porty_val & BIT_4) != 0x10) {
            g_string = 0;
        } else {
            g_string = -1;
        }
        PT_YIELD_TIME_msec(5);
    }
    PT_END(pt);
}

//=== Read flex sensors ========================================================
// variable to keep track of current value on PORTZ expander
unsigned int portz_val;
// variable to keep track of previous value on PORTZ expander
unsigned int prev_portz_val;
static PT_THREAD (protothread_flex(struct pt *pt)) {
    PT_BEGIN(pt);
    while (1) {
        // update prev_portz_val
        prev_portz_val = portz_val;
        // lock SPI2 so that port expander won't be interrupted
        start_spi2_critical_section;
        // read PORTZ expander
        portz_val = (readPE(GPIOZ));
        // unlock SPI2 since port expander functions are completed
        end_spi2_critical_section;
        // logic to determine which string has been plucked, which means that
        // the flex sensor corresponding to that string has been
        // flexed, thereby outputting a low signal from the Schmitt
        // trigger connected to each
        // if the flex sensor is flexed, then the corresponding plucked variable
        // is set to 0, otherwise it's -1
        // only one string sounds at a time: if several flex sensors are
        // flexed, the last one checked (in E, A, D, G order) supersedes the
        // others to prevent the collision of the sine waves from each
        // (i.e. if E and G are both plucked, then G will produce the sound)
        // Z7 corresponds to the E string
        // Z6 corresponds to the A string
        // Z5 corresponds to the D string
        // Z4 corresponds to the G string
        if ((portz_val & BIT_7) != 0x80) {
            e_pluck = 0;
            a_pluck = -1;
            d_pluck = -1;
            g_pluck = -1;
        } else {
            e_pluck = -1;
        }
        if ((portz_val & BIT_6) != 0x40) {
            e_pluck = -1;
            a_pluck = 0;
            d_pluck = -1;
            g_pluck = -1;
        } else {
            a_pluck = -1;
        }
        if ((portz_val & BIT_5) != 0x20) {
            e_pluck = -1;
            a_pluck = -1;
            d_pluck = 0;
            g_pluck = -1;
        } else {
            d_pluck = -1;
        }
        if ((portz_val & BIT_4) != 0x10) {
            e_pluck = -1;
            a_pluck = -1;
            d_pluck = -1;
            g_pluck = 0;
        } else {
            g_pluck = -1;
        }
        // update prev_string_depressed
        prev_string_depressed = string_depressed;
        // string depressed is set if any one or more of the flex sensors on
        // PORTZ are flexed
        string_depressed = (((portz_val & BIT_7) != 0x80) || ((portz_val & BIT_6) != 0x40) ||
                            ((portz_val & BIT_5) != 0x20) || ((portz_val & BIT_4) != 0x10));
        // logic to start the next amplitude envelope attack/decay
        // if the previous state of the strings was that none were depressed
        // and the current state is that 1+ of the strings are depressed,
        // then a new attack needs to be started
        // if the previous state of the strings was that 1+ of the strings were
        // depressed and the current state is that none are depressed,
        // then a new decay needs to be started
        if (prev_string_depressed == 0 && string_depressed == 1) {
            // start new attack !
            curr_attack_time = 0;
            current_amplitude = 0;
        } else if (prev_string_depressed == 1 && string_depressed == 0) {
            // start new decay !
            curr_decay_time = 0;
            current_amplitude = max_amplitude;
        }
        // to prevent the flex sensor outputs from changing too quickly, as that
        // would lead to collisions on the DAC_A output
        PT_YIELD_TIME_msec(5);
    }
    PT_END(pt);
}

//=== Read distance sensor =====================================================
// variables to handle averaging of the distance sensor readings
unsigned int circular_dist_buff[4] = {0,0,0,0};
// index of the oldest reading, which the next reading overwrites
unsigned int curi;
// running sum of the four readings in circular_dist_buff
unsigned int dist_sum;
// averaged distance reading used for fret detection
unsigned int distance;
static PT_THREAD (protothread_distance(struct pt *pt)) {
    PT_BEGIN(pt);
    while (1) {
        // set trig signal high
        mPORTASetBits(BIT_1);
        // keep it high for 1ms (although original program structure used 10µs)
        PT_YIELD_TIME_msec(1);
        // set trig signal low
        mPORTAClearBits(BIT_1);
        // clear the interrupt flag
        INTClearFlag(INT_IC1);
        // zero the timer
        WriteTimer3(0x000);
        // moving average of the last 4 distance readings captured from IC:
        // drop the oldest reading from the running sum, store the new one,
        // and add it to the sum
        dist_sum -= circular_dist_buff[curi];
        circular_dist_buff[curi] = capture >> 3;
        dist_sum += circular_dist_buff[curi];
        curi = (curi+1) & 0x03;
        // divide by 4 so distance keeps the scale of a single reading
        distance = dist_sum >> 2;
        // logic for setting the appropriate fret value for each string
        // if one of the strings has been broken and the averaged distance
        // from the ultrasonic distance sensor is within the calibrated
        // range, then the fret variable for that given string is set
        // accordingly
        // each fret is set between 0 and 6, where frets 1-6 are calibrated for
        // a specific distance reading based on the corresponding
        // broken string
        if (e_string != -1 || a_string != -1 || d_string != -1 || g_string != -1) {
            if (e_string != -1 && distance < 320) e_fret = 6;
            if (a_string != -1 && distance < 270) a_fret = 6;
            if (d_string != -1 && distance < 320) d_fret = 6;
            if (g_string != -1 && distance < 350) g_fret = 6;
            if (e_string != -1 && distance < 260) e_fret = 5;
            if (a_string != -1 && distance < 240) a_fret = 5;
            if (d_string != -1 && distance < 290) d_fret = 5;
            if (g_string != -1 && distance < 320) g_fret = 5;
            if (e_string != -1 && distance < 230) e_fret = 4;
            if (a_string != -1 && distance < 200) a_fret = 4;
            if (d_string != -1 && distance < 250) d_fret = 4;
            if (g_string != -1 && distance < 260) g_fret = 4;
            if (e_string != -1 && distance < 180) e_fret = 3;
            if (a_string != -1 && distance < 170) a_fret = 3;
            if (d_string != -1 && distance < 220) d_fret = 3;
            if (g_string != -1 && distance < 240) g_fret = 3;
            if (e_string != -1 && distance < 140) e_fret = 2;
            if (a_string != -1 && distance < 140) a_fret = 2;
            if (d_string != -1 && distance < 180) d_fret = 2;
            if (g_string != -1 && distance < 200) g_fret = 2;
            if (e_string != -1 && distance < 120) e_fret = 1;
            if (a_string != -1 && distance < 120) a_fret = 1;
            if (d_string != -1 && distance < 150) d_fret = 1;
            if (g_string != -1 && distance < 180) g_fret = 1;
        } else {
            e_fret = 0;
            a_fret = 0;
            d_fret = 0;
            g_fret = 0;
        }
        PT_YIELD_TIME_msec(50);
    }
    PT_END(pt);
}

// === Main ======================================================
int main(void) {
    ANSELA = 0; // set port A digital
    ANSELB = 0; // set port B digital
    // set up RA1 as a digital output for the ultrasonic sensor's trigger
    mPORTASetPinsDigitalOut(BIT_1);
    // init the port expanders, both port Y and port Z
    start_spi2_critical_section;
    initPE();
    // Port Y on the expander as digital inputs (beam break receivers)
    mPortYSetPinsIn(0xff); // Set port Y as input
    mPortYEnablePullUp(BIT_3 | BIT_4 | BIT_5 | BIT_6 | BIT_7);
    // Port Z on the expander as digital inputs (flex sensor Schmitt triggers)
    mPortZSetPinsIn(0xff); // Set port Z as input
    //mPortZEnablePullUp(BIT_3 | BIT_4 | BIT_5 | BIT_6 | BIT_7);
    //EnablePullDownB(BIT_7 | BIT_8 | BIT_9);
    end_spi2_critical_section;
    // set up DAC on big board
    // timer interrupt //////////////////////////
    // Set up timer2 on, interrupts, internal clock, prescaler 1, toggle rate
    // at 40 MHz PB clock
    // 40,000,000/Fs = 909 : since timer is zero-based, set to 908
    OpenTimer2(T2_ON | T2_SOURCE_INT | T2_PS_1_1, 908);
    // set up the timer interrupt with a priority of 2
    ConfigIntTimer2(T2_INT_ON | T2_INT_PRIOR_2);
    mT2ClearIntFlag(); // and clear the interrupt flag
    // SCK2 is pin 26
    // SDO2 (MOSI) is in PPS output group 2, could be connected to RB5 which is pin 14
    PPSOutput(2, RPB5, SDO2);
    // control CS for DAC
    mPORTBSetPinsDigitalOut(BIT_4);
    mPORTBSetBits(BIT_4);
    // === Config timer3 free running ==========================
    // set up timer3 as a source for input capture
    // and let it overflow for continuous readings
    // use prescaler of 32 to make timer take longer to expire
    OpenTimer3(T3_ON | T3_SOURCE_INT | T3_PS_1_32, 0xffff);
    // === set up input capture ================================
    OpenCapture1(IC_EVERY_FALL_EDGE | IC_INT_1CAPTURE | IC_TIMER3_SRC | IC_ON);
    // turn on the interrupt so that every capture can be recorded
    ConfigIntCapture1(IC_INT_ON | IC_INT_PRIOR_3 | IC_INT_SUB_PRIOR_3);
    INTClearFlag(INT_IC1);
    // connect PIN 24 to IC1 capture unit
    PPSInput(3, IC1, RPB13);
    // configure SPI2 as master. Not using SS in this example
    // 16 bit transfer, clock edge reversed (SPI_OPEN_CKE_REV)
    // possibles SPI_OPEN_CKP_HIGH; SPI_OPEN_SMP_END; SPI_OPEN_CKE_REV
    // For any given peripheral, you will need to match these
    // clk divider set to 4 for 10 MHz
    SpiChnOpen(SPI_CHANNEL2, SPI_OPEN_ON | SPI_OPEN_MODE16 | SPI_OPEN_MSTEN | SPI_OPEN_CKE_REV, 4);
    // end DAC setup
    // build the sine lookup tables
    // both tables hold values between -1 and 1; the ISR scales them by
    // current_amplitude and adds 2048 for the 12-bit DAC
    _Accum minoftable = 1; // keep track of min & max in additive synth table
    _Accum maxoftable = -1;
    int i, k;
    double value, x;
    for (i = 0; i < sine_table_size; i++) {
        // calc values for normal sine table
        sine_table[i] = (_Accum)(sin((float)i*6.283/(float)sine_table_size));
        // do additive synth for this entry in sine_table_harmonix
        value = 0.0;
        for (k = 0; k < 6; k++) { // loop over the fundamental and 5 overtones
            x = (float)(k+1)*i*6.283/(float)sine_table_size; // current x value used for this harmonic
            // calculate the normal sine
            value += harmonix[k]*sin(x);
        }
        // update min & max in additive synth table
        if (value > maxoftable) maxoftable = value;
        if (value < minoftable) minoftable = value;
        // store calculated value
        sine_table_harmonix[i] = (_Accum) value;
    }
    // do a min-max scaling of sine_table_harmonix to bring to range [0,1]
    // then multiply by 2 and subtract 1 to bring to range [-1,1] like sine_table
    for (i = 0; i < sine_table_size; i++) {
        sine_table_harmonix[i] = 2*((sine_table_harmonix[i]-minoftable)/(maxoftable-minoftable))-1;
    }
    // calc phase increments: the note frequency * 2^32 / sampling frequency
    int j;
    for (j = 0; j < 23; j++) {
        ph_incr[j] = freqs[j]*two32/Fs;
    }
    // build the amplitude envelope parameters
    // envelope parameters range check
    if (attack_time < 1) attack_time = 1;
    if (decay_time < 1) decay_time = 1;
    // set up increments for calculating the amplitude envelope
    attack_inc = max_amplitude/(_Accum)attack_time;
    decay_inc = max_amplitude/(_Accum)decay_time;
    current_amplitude = 0;
    // start in the fully decayed state so the ISR doesn't ramp below zero at power-up
    curr_decay_time = decay_time;
    string_depressed = 0;
    prev_string_depressed = 0;
    curi = 0;
    dist_sum = 0;
    // === config the uart, DMA, vref, timer5 ISR ===========
    PT_setup();
    // === setup system wide interrupts ====================
    INTEnableSystemMultiVectoredInt();
    PT_INIT(&pt_distance);
    PT_INIT(&pt_beam);
    PT_INIT(&pt_flex);
    // round-robin scheduler for threads
    while (1) {
        PT_SCHEDULE(protothread_distance(&pt_distance));
        PT_SCHEDULE(protothread_beam(&pt_beam));
        PT_SCHEDULE(protothread_flex(&pt_flex));
    }
} // main
```

Source: AIR BASS